WARNING: This script is work in progress

Many chapters are still empty or incomplete.

More and more people code nowadays.

Learning to program is becoming increasingly important in many curricula, both at school and at university. In addition, the emergence of modern and easy to learn programming languages, such as Python, is lowering the entry threshold for taking first steps in programming and attracting novices of all ages from various backgrounds.

As such, programming is not a skill mastered by a small group of experts anymore, but is instead gaining importance in general education, comparable to mathematics or reading and writing.

The idea for this book came up whilst working as a programmer and consultant for ETH Zurich Scientific IT Services. Whether it was during code reviews, code clinics or programming courses for students, researchers, or data scientists, we realized that there is a big need among our clients to advance in programming, and to learn best practices from experienced programmers.

Whilst the first steps in programming focus on getting a problem solved, professional software development is about writing robust, reliable and efficient code, programming in teams, and best practices for writing programs spanning more than a few hundred of lines of code.

Although plenty of established books about such advanced topics exist, most of them are comprehensive and detailed, and require programming experience in advanced topics such as object oriented programming. Beyond that, these books often target programmers already making a living from software development.

On the other hand, introductions to the topics we offer exist but fragmented on the internet. Regrettably theses are not collected and offered in one single place and also are not selected and presented uniformly accordingly to our beliefs.

Regrettably, our clients’ motivation to progress is usually constrained by available time, and by other tasks having higher priority. Answers along the lines of "I fully agree that my code would benefit from your suggested changes, but I have no time for this" are no exception. As a result, we often do not recommend existing books, but instead focus on the most important concepts and on pragmatic, efficient and basic instructions to benefit our clients as efficiently as possible in the face of the given constraints.

This book follows this way of thinking. Instead of trying to provide an all-embracing and deep introduction into the principles of professional software development, our attempt is to select topics we regard as being the most important, and to offer sound introductions and explanations to the underlying concepts and principles. The reader should learn enough to get started and be able to dig deeper by learning from more advanced resources. We also attempt to split the book into chapters with minimal interdependence, so that readers can pick parts according to their interests and existing knowledge.

1. About this book

2. The programming process

3. General principles and techniques

4. Clean code

You might not believe it, but the following program is valid Python code and

prints Hello world!:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43 # hello_world.py

(lambda _, __, ___, ____, _____, ______, _______, ________:

getattr(

__import__(True.__class__.__name__[_] + [].__class__.__name__[__]),

().__class__.__eq__.__class__.__name__[:__] +

().__iter__().__class__.__name__[_:][_____:________]

)(

_, (lambda _, __, ___: _(_, __, ___))(

lambda _, __, ___:

bytes([___ % __]) + _(_, __, ___ // __) if ___ else

(lambda: _).__code__.co_lnotab,

_ << ________,

(((_____ << ____) + _) << ((___ << _____) - ___)) + (((((___ << __)

- _) << ___) + _) << ((_____ << ____) + (_ << _))) + (((_______ <<

__) - _) << (((((_ << ___) + _)) << ___) + (_ << _))) + (((_______

<< ___) + _) << ((_ << ______) + _)) + (((_______ << ____) - _) <<

((_______ << ___))) + (((_ << ____) - _) << ((((___ << __) + _) <<

__) - _)) - (_______ << ((((___ << __) - _) << __) + _)) + (_______

<< (((((_ << ___) + _)) << __))) - ((((((_ << ___) + _)) << __) +

_) << ((((___ << __) + _) << _))) + (((_______ << __) - _) <<

(((((_ << ___) + _)) << _))) + (((___ << ___) + _) << ((_____ <<

_))) + (_____ << ______) + (_ << ___)

)

)

)(

*(lambda _, __, ___: _(_, __, ___))(

(lambda _, __, ___:

[__(___[(lambda: _).__code__.co_nlocals])] +

_(_, __, ___[(lambda _: _).__code__.co_nlocals:]) if ___ else []

),

lambda _: _.__code__.co_argcount,

(

lambda _: _,

lambda _, __: _,

lambda _, __, ___: _,

lambda _, __, ___, ____: _,

lambda _, __, ___, ____, _____: _,

lambda _, __, ___, ____, _____, ______: _,

lambda _, __, ___, ____, _____, ______, _______: _,

lambda _, __, ___, ____, _____, ______, _______, ________: _

)

)

)

If you want to understand how this program works, continue reading here.

|

Writing such obfuscated code maybe fun and challenging. It also may increase your reputation as a coding wizard, but you should definitely invest your mental energy better than into writing such code if you want to be regarded as a professional software developer. |

One common rule is You read code more often than you write it. Let it be because you want to find a bug, you want to extend or improve code, or because others want to learn from it.

So always consider that you might want to understand your own code in weeks or months and, as a non-selfish and team oriented programmer, that this is also the case for others.

Another idea behind good code, is that your code should communicate your solutions ideas and concepts. So code not only instructs your computer to solve a problem, but is a means of communication with humans also.

So, write code for humans, not for computers !

What we present and introduce in this chapter should not be considered as hard rules. As for almost all rules reasonable exceptions exist in real life.

4.1. How does clean code look like ?

What are the hallmarks of readable code ? Following principles are the basis for clean code. Although we list a few examples how to realize these principles, more elaborate explanations and techniques will follow in the following sections:

-

Little scrolling needed. To understand how a "unit" in your code works should require no or little scrolling. This e.g. can be supported by writing short functions and by keeping interdependent parts together.

-

Minimal level of indentation: Loops and branches introducing more than two levels of indentation can be hard to understand, especially if they don’t fit on one single page on your screen. Writing functions can circumvent this. This is also mentioned in the Zen of Python. Newspapers having narrow columns follow the same principle: short and many rows are preferred over long and little rows.

-

Clear naming: Well chosen names are always up-to-date with the code and should support understandability of your code and not confuse the reader. Good names also can avoid unneeded comments and thus contribute to shorter code.

-

Directly understandable: This includes avoiding programming tricks which result in short one liners but will require extra mental work when you or others read this. The code layout should also follow your solution strategy.

-

No surprises: As an example names and comments should never contradict your approach or confuse and cause deep wrinkles on the readers forehead.

-

Consistency: Among st other things, naming of variables and functions should be consistent. So either use

indexas a variable name in all places oridx, but not both ! -

Don’t litter: Keeping out-commented lines of code or unused functions in your code only increases code size and decreases understandability. Use a Version Control System instead.

4.2. General comments about code layout

4.2.1. The usefullness of empty lines

Don’t underestimate the effect of using empty lines to structure your code. An empty line separating two blocks of code guides your eye and makes it easier to focus on such a block. So group related fragments together and separate them with single empty lines. But don’t overuse this !

4.2.2. Keep related statements close together.

Try to structure your code in a way that the flow of operations is as linear as possible. Also try to reduce scattering of related operations, such as initializing and using a specific object.

The following example already uses empty lines to structure the code. But operations such as opening the result file and writing to it, as well as initializing lists and appending data, are scattered:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18 # grouping_bad.py

with open("results.txt", "w") as fh:

filtered_numbers = []

numbers = [2, 4, 6, 8, 9, 10] (1)

results = []

for number in numbers:

if number % 2 == 0:

filtered_numbers.append(number) (2)

else:

break

for number in filtered_numbers:

results.append(number + 1) (3)

results.append(number ** 2)

for result in results:

print(result, file=fh) (4)

| 1 | The actual data we want to process is defined in the middle of the script. |

| 2 | Your eyes have to move up multiple lines to check the initial value and type of filtered_numbers. |

| 3 | Even more lines to scan to find the initial value and type of results. |

| 4 | You have to read the top of the file to check the used file name and opening mode. Opening and writing is scattered over the script. |

The distinct steps

-

define input data

-

filter data

-

compute results

-

write results

are clearly visible in the reordered code now:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18 # grouping_good.py

numbers = [2, 4, 6, 8, 9, 10] (1)

filtered_numbers = [] (2)

for number in numbers:

if number % 2 == 0:

filtered_numbers.append(number)

else:

break

results = [] (3)

for number in filtered_numbers:

results.append(number + 1)

results.append(number ** 2)

with open("results.txt", "w") as fh: (4)

for result in results:

print(result, file=fh)

| 1 | The data to process now stands out at the beginning of the script. |

| 2 | Initialization of filtered_numbers is now closer to the following

filtered_numbers.append. |

| 3 | Same for results. |

| 4 | The chosen file name and file opening mode are now also close to the usage of the file handle. |

4.2.3. PEP 8

A code style guide gives general advice on how to format your code to improve readability. If all developers involved in a project follow the same style the code is more consistent, and readable for all people involved.

The most important rules of PEP 8 are:

-

Use multiples of four spaces for indentation.

-

Don’t mix tabs and spaces for indentation. Most IDEs and code editors allow automatic conversion, maybe you have to enable this in your editors settings.

-

Only use lowercase letters, underscores and digits for variable and function names. This is also called snake case. E.g.

input_file_folder. For class names use camel case, this means uppercase characters for the first character of words, else lowercase letters. E.g.InputFileHandler. For constants user uppercase characters and underscores, e.g.DAYS_PER_WEEK. -

Use spaces around assignment

=, comparisons like!=and algebraic operations like+. But no spaces around=for named function arguments or declaring functions with default values. -

Use one space after

, -

no spaces after

[or before], same for(and). -

Maximum 79 characters per line. This is often weakened to 99 characters, but not more !

-

Two empty lines between functions and classes, one empty line between methods.

This is an example for non PEP 8 compliant code:

1

2

3

4

5

6

7

8 # non_pep8.py

class adder:

def __init__(self,x,y):

self.x=x

self.y=y

def addNumbers(numberOne,numberTwo):

return numberOne+numberTwo

And this is the same code after reformatting for PEP 8 compliance:

1

2

3

4

5

6

7

8

9

10 # pep8.py

class Adder:

def __init__(self, x, y):

self.x = x

self.y = y

def add_numbers(number_one, number_two):

return number_one + number_two

| As code in a book reads differently than in a code editor, some of the examples in this book are not PEP 8 compliant, e.g. we use often only one empty line to separate function definitions. |

| Use tools like pep8 to check your code from time to time. Some IDEs also indicate PEP 8 violations or offer tools to format your code for PEP 8 compliance. |

4.2.4. Don’t postpone naming decisions and renaming

Don’t postpone choosing good names when you write code the first times. Don’t postpone rename in case names get out of date during development. Not adapting names to introduced changes can turn your names into lies !

4.3. About good names

This chapter introduces some basic rules for good names. Let it be for variables, functions or also classes.

Can you spot the error in the following example ?

1

2

3 # bad_name.py

def compute(a, b):

return a + b

Compare this to the following code where we use expressive names for the function and the variables:

1

2

3 # good_name.py

def rectangle_area(height, width):

return height + width

Giving functions and variables unambiguous names expressing the programmers

intention can reduce the need for comments. You could try to fix the

first example above by adding a comment that compute computes

the area of an rectangle. The second version does not require a

comment, is shorter and directly understandable. So prefer names like

first_names over the_list or fn.

Encoding the unit of a physical quantity in a variable name makes sense to

reduce errors

and to avoid comments. So is time_since_event not the worst name but

time_since_event_in_seconds will be much clearer (if the unit is fixed and

does not change based on other factors).

I use either idx or index as name or part of names in the same project.

This reduces mental mapping and also searching for already used

names. I also use singular vs plural for distinguishing elements and a collection

of elements. E.g. for first_name in first_names:.

Your brain prefers pronounceable names when reading code. So prefer full words over inconsistent abbreviations.

The term "iteration index" is a term you can use in spoken language when

you communicate with humans, thus prefer the variable name iteration_index over

index_iteration.

If you encode the data type of a variable in its name, you have to change the names

as soon as the data type changes. Else your code will cause confusion.

So better use names instead of name_list, or name_to_phone_number instead

of phone_number_dict.

In the olden days, when Fortran 77 was a modern programming language and the length of variable and function names where restricted, the so called Hungarian notation was a commonly used convention how to encode types into names. As said, this was in the olden days.

Why use a_name if name fulfills the same purpose ? Why income_amount if

income is sufficient ? If a spelling error sneaks into a name, fix this instead

of reproducing the same error all over the code, you might want to search for

the name.

Choose names as general as possible. In the improved

example above we could have used def window_area(window_height,

window_width) instead, because this is how we use this function in our current

house construction project. The choice of more general names here make this

function reusable within other projects without modification.

Using window in the names here also introduces noise as the computation here

is not specific to a window, and the computation will also only be correct if

the window is rectangular.

As classes in most cases represent entities, and methods often are considered

as actions, one should try to choose nouns for class names, and verbs for

method names.

For functions verbs should be preferred, but sometimes nouns are also ok.

E.g. we used rectangle_area above and not compute_rectangle_area, as

area = rectangle_area(h0, w0) reads well and adding compute_ would

introduce noise.

A common convention is to use i, j, and k as variable in a counting loop,

names as n and m for natural numbers, eg. for the length of a list, or for

the dimensions of a matrix. This is ok for names of local variables which are

only used within a small range of lines of code.

4.4. Explanatory variables

Introducing extra variables can enhance readability by far. So you can name sub-expressions of larger mathematical formulas, and avoid error prone duplications. If the names are well chosen extra variables introduce extra information for the reader and thus enhance understandability.

The following code assumes that config is a nested dictionary holding the

configuration settings for some data analysis:

1

2

3

4

5

6 # repeated_expressions.py

def analyze(config, data):

eps = config['numerics']['eps']

max_iteration_count = config['numerics']['max_iteration_count']

max_relative_error = config['numerics']['max_relative_error']

...

By introducing a meaningful variable numerics for a repeated sub-expression

we reduce code duplication, thus the probability of typing mistakes. The result

is also more pleasant to read. In case you rename the key for the numerical

parameters section, only one change is required:

1

2

3

4

5

6

7 # with_sub_expressions.py

def analyze(config, data):

numerics = config['numerics']

eps = numerics['eps']

max_iteration_count = numerics['max_iteration_count']

max_relative_error = numerics['max_relative_error']

...

Another example using an explanatory variable to simplify mathematical expressions:

1

2

3

4

5

6

7

8

9

10

11

12 # quadratic_before.py

import math

def solve_quadratic(p, q):

"""solves x^2 + p x + q == 0"""

if p * p - 4 * q < 0:

return None, None

x1 = -p / 2 + math.sqrt(p * p - 4 * q) / 2

x2 = -p / 2 - math.sqrt(p * p - 4 * q) / 2

return x1, x2

assert solve_quadratic(-3, 2) == (2, 1)

The term \$p^2 - 4q\$ has a mathematical meaning and appeared three

times. This is the improved version using a variable determinant:

1

2

3

4

5

6

7

8

9

10

11

12

13 # quadratic_after.py

import math

def solve_quadratic(p, q):

"""solves x^2 + p x + q == 0"""

determinant = p * p - 4 * q

if determinant < 0:

return None, None

x1 = -p / 2 + math.sqrt(determinant) / 2

x2 = -p / 2 - math.sqrt(determinant) / 2

return x1, x2

assert solve_quadratic(-3, 2) == (2, 1)

Literal values like 24 or 60 in the context of time calculations may be

understandable, but the intention of mem_used / 1073741824 requires a comment:

1 mem_used /= 1073741824 # bytes to gb

Using a constant variable for such magic values is the better solution,

especially when such calculations appear multiple times in different

parts of your code. Usually constants are declared at the top of a script

after the import statements.

1

2

3 BYTES_PER_GB = 1073741824

...

mem_used /= BYTES_PER_GB

4.5. About good comments

The less comments you have to write the better. This is a bold statement and the idea of reducing the amount of comments might be contrary to your current coding practice.

The reason for this is that comments can turn into lies if you change your code but don’t adapt your comments. This is also an example for avoidable redundancy.

The trick is to reduce the amount of comments needed by choosing good names and decomposing your code into small functions.

This comment is redundant and describes what we already know:

1 tolerance = tolerance / 60 # divide by 60

But this comment explains the intention:

1 tolerance = tolerance / 60 # seconds to minutes

When writing comments you can be deep in the programming process and comments will appear understandable. Often this is a misjudgement, because your mind is familiar with the current context. So review your comments and consider if you or others will be able to understand them weeks or months later.

Don’t comment your functions by writing comment lines before the def line.

Python offers a better mechanism: If you declare a string after def and before the

first actual statement of the body of the function this string is called

docstring and automatically contributes to Pythons built-in help system:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20 # docstring_example.py

def gcd(m, n):

"""computes greatest common divisor of two numbers (1)

args:

m (int) - first number

n (int) - second number

returns:

gcd (int) - greatest common divisor of m and n (2)

"""

while m != n:

if m > n:

m -= n

else:

n -= m

return m

assert gcd(24, 15) == 3 (3)

assert gcd(1024, 17) == 1

help(gcd)

| 1 | First line of docstring |

| 2 | Last line of docstring |

| 3 | Quick checks if function actually works |

And this is the output from help(gcd):

1

2

3

4

5

6

7

8

9

10 $ python docstring_example.py

Help on function gcd in module __main__:

gcd(m, n)

computes greatest common divisor of two numbers

args:

m (int) - first number

n (int) - second number

returns:

gcd (int) - greatest common divisor of m and n

Often we deactivate code by turning it into comments. This is fine for experiments, but unused code should be removed as early as possible. The same holds for unused functions. The idea of Version Control Systems is to track changes of your source code over time and to allow restoring files from older versions.

4.6. About good functions

Functions are fundamental building blocks for larger programs and we already demonstrated the beneficial effects of using functions to avoid comments. Here we discuss a few recommendations for writing good functions additionally to choosing good names.

To decide if a function name is a good name, and if the way you decompose your problem into functions is well chosen, should mainly be done from the callers perspective.

Ask yourself if the code sections calling your functions are readable, and if another function name or other arguments could improve this. Ask yourself also if the way you call and combine your functions appers to be intuitive and straightforward.

The later introduced technique of test driven development supports this practice.

Lets start with an example of a function not doing one thing: The function

check_prime below asks for a number, checks if its prime, prints some output

and returns a result.

1

2

3

4

5

6

7

8

9

10

11

12

13 # multifunc.py

def check_prime():

n = int(input("number to check ? "))

divisor = 2

while divisor * divisor <= n:

if n % divisor == 0:

print("no prime")

return False

divisor += 1

print("is prime")

return True

check_prime()

And this is an improved version:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20 # is_prime.py

def is_prime(n): (1)

divisor = 2

while divisor * divisor <= n:

if n % divisor == 0:

return False

divisor += 1

return True

def ask_user_and_check_prime(): (2)

n = int(input("number to check ? "))

if is_prime(n):

print(n, "is prime")

else:

print(n, "is not prime")

assert is_prime(7)

assert not is_prime(8)

assert not is_prime(9)

ask_user_and_check_prime()

| 1 | We extracted a function is_prime which returns a logical

value indicating if the argument n is prime or not. |

| 2 | We renamed check_prime to ask_user_and_check_prime and the function

has now the purpose of the main task. |

Some benefits:

-

is_primeis reusable, the user of this function can decide where the number to check is coming from and if a result should be printed or not. -

is_primebe tested within an automated testing suite. The former version contained a call ofinput, such user interaction makes automated testing difficult.

| The concept of "one thing" is a bit vague and related decisions sometimes require some gut feeling or experience. This concept gets more cleare in combination with the following sections. |

Long functions spanning multiple pages on a screen are hard to understand and require scrolling, whereas short functions are spatially localized and can be grasped easier.

Splitting up code into small functions also introduces more names, and, provided that the names are well chosen, introduces more information for the reader about how the code works and what parts of the code are intended to do.

Another problem introduced by longer functions is that control statements like

if, continue and break result in different execution paths depending on

the input arguments, as demonstrated in the following example:

1

2

3

4

5

6

7

8 def analyze(n):

if n % 2 == 0:

if n > 0:

...

else:

...

else:

...

Here we see different such execution paths for even and odd numbers, and for even numbers we recognize different paths for positive and non-positive numbers.

Implementing the … code parts in separate functions analyze_for_odd_n,

analyze_for_positive_even_n and analyze_for_non_positive_even_n shortens

the function analyze to a few lines and also introduces separate functions

which can be tested independently.

Implementing the … code parts within in analyze directly would result

in one larger function, and testing this

single function would require analysis of all possible paths and how to trigger

them.

This was a simple example, in real life applications understanding all such possible paths can be challenging and sometimes not all of them are understood and tested.

The code of a function should operate on the same level of abstraction. For example:

1

2

3

4

5 def workflow():

configuration = read_configuration()

data = read_data(configuration)

results = process(data, configuration)

write_result(results, configuration)

The other functions then also should follow this principle. E.g.

1

2

3

4

5

6

7

8 def read_data(configuration):

input_path = configuration['input_file']

if input_path.endswith(".csv"):

return read_csv(input_path)

elif input_path.endswith(".xlsx"):

return read_xlsx(input_path)

else:

raise NotImplementedError('no not know how to read {}'.format(input_file))

A function having multiple sections, maybe including comments to separate

and describe the different

sections, like # read data and # prepare data, is a good candidate for

splitting into different functions.

Another common pattern to avoid are so called flag arguments. Such function arguments are usually integers, sometimes strings, which modify the overall behaviour of a function.

This is an example using such a flag argument action

1

2

3

4

5

6

7

8

9 # flag_argument.py

def update(data, item, action):

assert action in (0, 1, 2)

if action == 0:

data['first_name'] = item

elif action == 1:

data['second_name'] = item

elif action == 2:

data['birthday'] = item

Instead we write different functions with appropriate names:

1

2

3

4

5

6

7

8

9 # without_flag_argument.py

def update_first_name(data, item):

data['first_name'] = item

def update_second_name(data, item):

data['second_name'] = item

def update_birthday(item):

data['birthday'] = item

The more arguments a function has, the harder it is to memorize the arguments, their

order and their intention.

This also increases the risk of introducing bugs.

Function calls with many arguments are also hard to read and understand.

A technique to reduce the number of arguments is to group arguments using dictionaries,

as attributes of classes, or using

namedtuple from the standard libraries collections module.

The argument list of the following function is very long:

1

2

3

4

5

6

7

8 # distance_3d_6_args.py

def distance_3d(x0, y0, z0, x1, y1, z1):

dx = x1 - x0

dy = y1 - y0

dz = z1 - z0

return (dx ** 2 + dy ** 2 + dz ** 2) ** .5

distance = distance_3d(1, 2, 3, 3, 2, 1) (1)

| 1 | The function call is hard to understand, especially if function definition and function call are not as close as here. |

If we introduce a new data type Point3D we obtain a function with only two

arguments:

1

2

3

4

5

6

7

8

9

10

11

12 # distance_3d_2_args.py

from collections import namedtuple

Point3D = namedtuple("Point3D", "x y z")

def distance_3d(p1, p2):

dx = p2.x - p1.x

dy = p2.y - p1.y

dz = p2.z - p1.z

return (dx ** 2 + dy ** 2 + dz ** 2) ** .5

distance = distance_3d(Point3D(1, 2, 3), Point3D(3, 2, 1)) (1)

| 1 | The function call now reveals the intention of the arguments. |

Another technique is to use dictionaries to group configuration settings or algorithmic parameters. The drawback is, that your code only documents the available or required keys within the functions implementation, whereas namedtuples or own classes make this more explicit.

The (at the time of writing) upcoming Python 3.7 release introduces data classes which extend the idea of namedtuples and also introduce automatic type checks.

If grouping arguments does not work a function, I recommend to use named function parameters when calling them. This applies also if you use functions from external libraries.

This example from scipy’s documentation demonstrates this:

1

2

3

4

5

6

7

8 from scipy.interpolate import interp1d

import numpy as np

x = np.linspace(0, 10, num=11, endpoint=True) (1)

y = np.cos(-x**2 / 9.0)

f = interp1d(x, y)

f2 = interp1d(x, y, kind='cubic')

| 1 | In numpy 1.14 the function linspace has six arguments, two

are mandatory, the remaining four are optional to override default values.

So choosing named arguments num=11 and endpoint=True here reveals their

intention and is also independent of the arguments order in the definition of

linspace. |

A function without side effects produces always the same result for same arguments, is independent of the environment and also does not change it.

This is the case for purely mathematical functions, but as soon as a function reads from or writes to a file, this criterion is violated. A file which exists on one computer might not exist on another computer, file permissions might be different, etc.

If a function depends on the environment, it might work on one day but not on another day, and if a functions changes the environment, other functions which worked before might now fail. So side effects can introduce some invisible coupling and thus increase complexity which we should keep as low as possible.

Thus functions without side effects are easier to test with unit-tests, easier to understand and debug, and also easier to re-use.

To reduce such side effects:

-

Isolate functions with side effects and keep them small. E.g. avoid functions which interact with the file system, web services or external programs and at the same time perform other operations like checking data for validity or doing some calculations. This overlaps with the concept that a function should only do one thing.

-

Don’t interact with variables outside the functions body. Python has a

globalstatement, but avoid this. While reading only from a global variable is already problematic, this gets even worse if two or more functions communicate over global variables. They are tightly coupled, are hard to re-use in other programs, and are also difficult to understand. If you still feel you need something like global data, look into object oriented programming, the idea of objects is to group such data and operations on it within classes.

Whereas the former ideas also apply for other programming languages, this section is about an issue specific to Python.

Can you guess what the following program computes ?

1

2

3

4

5

6

7 # mutable_default.py

def foo(a=[]): (1)

a.append(1)

return len(a)

print(foo())

print(foo())

Have you been right ?

1

2

3 $ python mutable_default.py

1

2

| 1 | One would assume that a=[] is executed for every call of foo, but

the list here is only created once when Python parses the code and

constructs the code object for this function. So from call to call

this is the same list object.

When you call foo the first time, the list is extended to [1],

after the second call it is [1, 1] and so on. |

Thus foo has a non-obvious side-effect, the chosen default value for a

changes from function call to function call.

Choosing mutable default values for function arguments can introduce

nasty and hardly detectable bugs. Never use mutable default values

like lists, sets, dictionaries. Immutable default values of type

int, float, bool, str, tuple don’t cause this problem. Same

for None.

|

To avoid this issue, replace mutable default values with None and handle this

default value within the function:

1

2

3

4

5

6

7

8

9 # mutable_default_fixed.py

def foo(a=None):

if a is None:

a = []

a.append(1)

return len(a)

print(foo())

print(foo())

Now you get a reproducible result:

1

2

3 $ python mutable_default_fixed.py

1

1

4.7. Consistency

Consistency relates to consistent code style, consistent naming and also to consistent error handling.

Choose a code style for your project and stick to it.

This is important when you develop code in a team but also for one-man projects. Mixing code styles produces a confusing result, and sticking to the same style makes you feel more comfortable reading or extending parts developed by others.

We recommend PEP 8. This is the most often followed style guide for Python code nowadays, and if you get used to it, you find most exising Python code on the internet or from other sources plesant to read.

Beyond that, the more people follow PEP 8, the more consistent Python code gets overall, not only in distinct projects.

| If you work on an existing project which did not follow PEP 8, refuse to mix the existing style with PEP 8. Code with mixed styles looks very inconsistent and confusing. |

For example choose either idx or index as names or part of names all over

your code.

Consistent naming makes life for programmers much easier by reducing searches for details of given names in the source code.

In a former project I had to read and process text input files with different

content. I choose consistent function names like read_site_from_file,

read_source_from_file, check_site, check_source, insert_site_into_db

and insert_source_into_db etc.

Another example: You develop a program requesting data from different web

services. Then consistency would be increased if you use the term fetch

throughout in variable and functions names, but also in comments. Inconsistent

code would mix fetch and similar terms like retrieve or get.

Functions can fail for different reasons. Be consistent to choose a fixed strategy to handle a specific reason.

E.g. assume within our current project some functions have an identifier as

argument. All such functions should either return None if this identifier is

invalid or raise and (e.g.) ValueError exception, but this should not be mixed.

This makes life for you much easier to implement error handilng if you call such functions.

4.8. Minimize indentation

Deep indentation should be avoided, especially if code spans more than a single page on your screen.

Personal anecdote: I once was asked to optimize some slow code. Printed on paper the code spanned 20 pages without declaring any single function. Loops and branches were deeply nested and often spanned multiple pages. After rewriting the code using functions and reducing indentation we could spot and fix the slow parts within half an hour.

The following example computes the number of real dividers of natural numbers in a given range. "real dividers" means dividers which are not one or the number itself.

1

2

3

4

5

6

7

8

9

10

11

12 # count_dividers.py

def main(n):

number_dividers = {}

for number in range(2, n):

dividers = 0

for divider in range(2, number):

if number % divider == 0:

dividers += 1 (1)

number_dividers[number] = dividers

return number_dividers

print(main(10))

| 1 | This line has maximal indentation of 16 spaces. |

1

2 $ python count_dividers.py

{2: 0, 3: 0, 4: 1, 5: 0, 6: 2, 7: 0, 8: 2, 9: 1}

Now we move the inner two loops into a separate function count_dividers.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15 # count_dividers_with_function.py

def count_dividers(number):

dividers = 0

for divider in range(2, number):

if number % divider == 0:

dividers += 1 (1)

return dividers

def main(n):

number_dividers = {}

for number in range(2, n):

number_dividers[number] = count_dividers(number)

return number_dividers

print(main(10))

| 1 | This line has maximal indentation by 12 spaces now. |

Pythons break statement only breaks out of the current loop. To break

out of multiple nested loops usually an extra variable tracking the state

of the iterations is required.

The following slightly unrealistic example demonstrates this: for n taking values 5, 10 and 15 it searches for the first pair of natural numbers which multiplied yield n:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16 # multiple_breaks.py

def main():

for n in (5, 10, 15):

found = False

for i in range(2, n):

for j in range(2, n):

if i + j == n:

print(i, j)

found = True (1)

break

if found: (2)

break

main()

| 1 | We need a variable to inform the loop over i that the inner loop

over j found a match |

| 2 | And now we need an extra break here. |

If me move the two nested loops into a separate function, return allows

us to break out much easier:

1

2

3

4

5

6

7

8

9

10

11

12

13 # multiple_breaks_with_return.py

def check(n):

for i in range(2, n):

for j in range(2, n):

if i * j == n:

print(i, j)

return

def main():

for n in (5, 10, 15):

check(n)

main()

So the next time you seed the pattern from the first example, consider to move the affected loops into a separate function.

The following checks if a given number is prime. It raises an exception when the number is not larger than 1.

1

2

3

4

5

6

7

8

9

10

11

12

13

14 # no_early_exit.py

def is_prime(n):

if n >= 1:

divider = 2

while divider * divider <= n:

if n % divider == 0:

return False

divider += 1

return True

else:

raise ValueError("need number >=1")

assert is_prime(17) is True

assert is_prime(14) is False

Checking fist and raising and exception allows us to implement the actual prime check with less indentation:

1

2

3

4

5

6

7

8

9

10

11

12

13

14 # early_exit.py

def is_prime(n):

if n < 1:

raise ValueError("need number >=1")

divider = 2

while divider * divider <= n:

if n % divider == 0:

return False

divider += 1

return True

assert is_prime(17) is True

assert is_prime(14) is False

The same technique applies for if within loops. Instead of

1

2

3 for n in numbers:

if valid(n):

compute(n)

you can check the opposite and use continue to skip the computation:

1

2

3

4 for n in numbers:

if not valid(n):

continue

compute(n)

The visual effect of this technique is more striking if you replace

compute by a longer code section, maybe also containing more loops and branches.

4.9. How to achieve a row length of maximum 79 or 99 characters in Python ?

There are multiple ways to split a long line into multiple shorter lines. To fix the following line, which is longer than the 79 characters recommended by PEP 8 …

1 this_is_a_long_variable = computation_result_long_abc * computation_result_long_def

we can use \ to indicate that the current line will continue with

the next line:

1

2 this_is_a_long_variable = computation_result_long_abc \

* computation_result_long_def

The Python interpreter also ignores line breaks if opening brackets are not closed yet:

1

2 this_is_a_long_variable = (computation_result_long_abc

* computation_result_long_def)

Or

1

2

3

4

5

6

7

8 addresses = [("peter", "muller", "8001", "zurich"),

("tim", "schmid", "8048", "altstetten"),

]

settings = {"numerics" : {"eps": 1e-3,

"max_iter": 1000},

"output_folder: "/tmp/results",

}

This also works for import:

1

2

3 from math import (cos,

sin,

tan)

Another not widely known feature of Python is automatic string concatenation:

1

2

3 text = ("abc" "def"

"ghi")

assert text == "abcdefghi"

which allows us breaking longer strings over multiple lines. The alternative approach using Pythons multi line strings

1

2 text = """abcdefj

ghi"""

introduces unwanted spaces and a \n.

| In case these methods result in expressions spanning multiple lines, it also can be helpfull to use temporary or explanatory variables to increase readability. This applies escpecially for longer expressions, e.g. mathematical formulas. |

5. Git introduction

git is a so called distributed version control system. It supports working on the same code base by a team of developers, but can also be used within one-man projects. The term version control system, also abbreviated as VCS, means that you can track past changes on your files and that you can also restore them or undo previous changes.

git is a kind of time machine for your code. git also allows managing

different versions of your code in the same folder structure. So with git you don’t

need to encode versions into file or folder names anymore. Say good bye to

script_24-12-2017.py and analysis_01_tim_special.py and look forward to a

clean and well organized code base.

|

Often people heard of git in the context of the code repositories

github.com or gitlab.com, but git can be used on a single machine without

any connection to these repositories.

git was released in 2005 by the Linux developer community. They had to replace their existing VCS and found no suitable alternative to manage contributions from hundreds of developers on a huge code base spanning millions of lines.

| git_s command line interface is a complex beast. For daily work you need only a small fraction of the available commands and options. For rare use cases and special solutions to _git related problems search at stackoverflow.com. |

| git was developed to manage source code or other text files as the asciidoc files for this book, but is not suited to manage binary and / or large files of several MB or larger. Look for git annex and git lfs if your code base contains such files. |

5.1. Installing git

-

On Mac OS X: Fetch the installer from https://git-scm.com/download/mac or use homebrew.

-

On Windows: https://git-scm.com/download/windows. If you run the installer make sure to select

Install Git from Git Bash onlywhen asked. Also install notepad++. -

On Linux: Use your package manager, e.g. on

Ubuntuthe command isapt-get install git.

All following instructions are on the command line within a terminal. Windows users should find a program called git bash after successful installation which offers a terminal.

To check if git works:

1

2 $ git --version

git version 2.11.0

The version number shown on your computer might be different, an exact match is not required for our instructions.

We introduce git here on the command line. Most IDEs nowadays implement their own

user interface to interact with git, but they differ. As most solutions on

stackoverflow.com are instructions at the

command line, you should know how to use git in the terminal anyway.

|

5.2. Initial Configuration

The following commands should be issued once after installing git. They set required and

reasonable default values.

Adapt the values for user.name and user.email to your personal data:

1

2 git config --global user.name "Uwe Schmitt"

git config --global user.email "uwe.schmitt@id.ethz.ch"

On Windows you should find a file named .gitconfig in your home folder.

Open this file with a text editor and add the lines:

[core] editor = 'C:\\Program Files\\Notepad++\\notepad++.exe'

Maybe you have to adapt this if notepad++ is installed in a different

folder, the single quotes and double backslashes are important !

Now run

1 git config --global core.autocrlf true

to configure line handling, more about this below.

You might adapt the the core.editor setting to your preferred editor, the

second setting of core.autocrlf must not be changed:

1

2 git config --global core.editor /usr/bin/nano

git config --global core.autocrlf input

Windows has a encoding for line endings different to the \n used on Mac OS X and Linux.

This can cause problems if you use git within a team where developers use different operating

systems. Without the specific settings for core.autocrlf above, files with

contain the same text will be considered to be different by git because of the

mentioned discrepancy in line endings.

Even if you intend to use git locally only, don’t skip this setting. You probably will forget this when you start to use git for distributed development in the future.

When you run

1 git config --global -e

your configured editor should show up. Don’t modify the shown file, close the editor immediately.

5.3. Fundamental concepts

We introduce a few underlying concepts of git here.

| Not all our explanations below are 100% exact. We omit some deeply technical details and instead prefer abstractions and concepts which help you to learn and understand git for your daily work. |

5.3.1. Patches

A patch is a text snippet which describes the transformation of a given file to a modified version. Usually such patches are created by a computer.

Let’s start with a file before modification

1

2 def rectangle_area(width, height):

return width + height

which we fix and improve to:

1

2

3 def rectangle_area(width, height):

"""computes area of a rectangle"""

return width * height

Then this is the patch describing those changes:

1

2

3

4

5 @@ -1,2 +1,3 @@

def rectangle_area(width, height):

- return width + height

+ """computes area of a rectangle"""

+ return width * height

Lines 3-5 describe the modification: If we remove starting with -

from the first version and add the lines starting with + we get

the modified file.

|

5.3.2. Commits

A commit consists of a single or multiple related patches. The history is an ordered list of commits.

When saying that git is a kind of time machine for your code, this does not work without your contribution: Commits are not created automatically by git, but you have to decide what changes contribute to a commit and to instruct git to create one.

This is an example how such a history looks like. The most recent entry is a the top.

1

2

3

4

5

6

7

8

9

10

11

12 $ git log (1)

commit 3e6696e23eb41b19f4119f8327cd2b9fd5e462c9 (2)

Author: Uwe <uwe.schmitt@id.ethz.ch> (3)

Date: Fri Apr 27 23:56:42 2018 +0200 (4)

fixed upper limit + added docstring (5)

commit 450b10eb8405130a7e9d7753998b0475d68cd1c8 (6)

Author: Uwe <uwe.schmitt@id.ethz.ch>

Date: Fri Apr 27 23:50:22 2018 +0200

first version of print_squares.py

| 1 | git log shows the history of our git history, more about this command later. |

| 2 | This is the latest commit. the long string after commit is the unique commit id. |

| 3 | The commits author. |

| 4 | The commits time stamp. |

| 5 | Every commit has a commit message describing the commit. |

| 6 | This is the commit before the entry we’ve seen above, compare the time stamps ! |

A commit id is a random string consisting of fourty symbols chosen from 0

.. 9 and a .. f. If we use commit ids in git commands, it is usually

sufficient to use the first eight to ten characters to identify the related commit.

And this is the same history, this time including patches:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33 $ git log -p (1)

commit 3e6696e23eb41b19f4119f8327cd2b9fd5e462c9

Author: Uwe <uwe.schmitt@id.ethz.ch>

Date: Fri Apr 27 23:56:42 2018 +0200

fixed upper limit + added docstring

diff --git a/print_squares.py b/print_squares.py (2)

index 140c0a6..18fce63 100644

--- a/print_squares.py

+++ b/print_squares.py

@@ -1,3 +1,4 @@

def print_squares(n):

- for i in range(1, n):

+ """prints squares of numbers 1 .. n"""

+ for i in range(1, n + 1):

print(i, "squared is", i ** 2) (3)

commit 450b10eb8405130a7e9d7753998b0475d68cd1c8

Author: Uwe <uwe.schmitt@id.ethz.ch>

Date: Fri Apr 27 23:50:22 2018 +0200

first version of print_squares.py

diff --git a/print_squares.py b/print_squares.py

new file mode 100644

index 0000000..140c0a6

--- /dev/null (4)

+++ b/print_squares.py

@@ -0,0 +1,3 @@

+def print_squares(n):

+ for i in range(1, n):

+ print(i, "squared is", i ** 2)

| 1 | git log -p additionally shows the patches related to every commit. |

| 2 | This is where the patch for the latest commit starts. |

| 3 | And this is where the patch for the latest commit ends. |

| 4 | This is a special patch. The /dev/null indicates that the file did not

exist before this commit. We see only added lines in the patch, thus this

commit describes the very first version of print_squares.py. |

You might have several changes in your files, but done for different reasons. Maybe one file was changed to fix a bug and changes in two other files implement a new feature of your software. Good practice is to create two commits for this. The first commit should contain the patches for fixing the bug, the other commit implements the new feature.

Commits should also be small. So in case you program daily, better create commits several times a day instead once a week.

One reason for this is that git e.g. allows undoing a previous commit as a whole, so undoing changes gets difficult if you only want to undo a part of a commit.

Try to separate commits which clean or restructure code, commits which fix bugs and commits adding or changing functionality.

Tasks like "I thought I fixed this bug, let’s find and inspect the related commit", or "What code changes actually implemented the new database scheme" will much easier if you follow these recommendations.

5.3.3. Staging Area / Index

To generate a commit we first must create patches and collect them in the

so called staging area, also named index.

We learn later the git add command which exactly does this.

5.4. Basic Git Hands-On

We introduce now a few git commands in a hands-on session. Please reproduce all steps on your computer. Typing helps to memorize the various git commands.

1

2

3 $ cd (1)

$ mkdir -p tmp/git_intro (2)

$ cd tmp/git_intro (3)

| 1 | An empty cd changes the working directory to your home folder. |

| 2 | We create a folder for the hands-on, the -p also creates the tmp folder if needed. |

| 3 | We cd into this folder. This is now our working directory for the following hands-on. |

To put the current folder under version control, we create a local git repository

using git init.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15 $ git init . (1)

Initialized empty Git repository in /Users/uweschmitt/tmp/git_intro/.git/

$ ls -al (2)

total 0

drwxr-xr-x 3 uweschmitt staff 96 Jun 2 23:12 .

drwxr-xr-x 37 uweschmitt staff 1184 Jun 2 23:12 ..

drwxr-xr-x 10 uweschmitt staff 320 Jun 2 23:12 .git

$ git status (3)

On branch master

No commits yet

nothing to commit (create/copy files and use "git add" to track)

| 1 | The . refers to the current working directory. The message you see in the following

line will be different on your computer. |

| 2 | The .git folder indicates that the current folder (and all future sub-folders)

are now under version control. This folder contains all data managed by git, e.g.

all commits. Never remove the .git folder ! |

| 3 | git status tells us that the repository is still empty. |

From now on the folder containing the .git directory and all files and

subfolders are under version control.

|

| Never create a git repository within an existing repository. |

We create a file prime_number.py in the current directory with the following content:

1

2

3

4

5

6

7 def is_prime(n):

divisor = 2

while divisor * divisor <= n:

if n % divisor == 0:

return False

divisor += 1

return True

Then we create our first commit:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16 $ git add prime_number.py (1)

$ git status

On branch master

No commits yet

Changes to be committed: (2)

(use "git rm --cached <file>..." to unstage)

new file: prime_number.py

$ git commit -m "first version of prime_number.py" (3)

[master (root-commit) b5f618d] first version of prime_number.py

1 file changed, 7 insertions(+)

create mode 100644 prime_number.py

| 1 | We add the new file to the staging area. |

| 2 | git status tells us that there is a new file in the staging area. |

| 3 | We create a commit, after -m you see the commit message we choose for this commit.

Just issuing git commit would open the configured editor where we can enter and save the commit message. |

1

2 $ git log

fatal: your current branch 'master' does not have any commits yet

Now we add a new function to prime_number.py:

1

2

3

4

5

6

7

8

9

10 def is_prime(n):

divisor = 2

while divisor * divisor <= n:

if n % divisor == 0:

return False

divisor += 1

return True

def primes_up_to(n):

return [i for i in range(2, n + 1) if is_prime(i)]

And a new file test_prime_number.py in the same folder:

1

2

3

4 from prime_number import *

up_to_11 = primes_up_to(11)

assert up_to_11 == [2, 3, 5, 7, 11], up_to_11

git diff tells us differences relative to the last commit:

1

2

3

4

5

6

7

8

9

10

11

12 $ git diff

diff --git a/prime_number.py b/prime_number.py

index 75e70ec..4b54780 100644

--- a/prime_number.py

+++ b/prime_number.py

@@ -5,3 +5,6 @@ def is_prime(n):

return False

divisor += 1

return True

+

+def primes_up_to(n):

+ return [i for i in range(2, n + 1) if is_prime(i)]

and git status shows us that there is a new file not under version control yet:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20 $ git status

On branch master

Initial commit

Changes to be committed:

(use "git rm --cached <file>..." to unstage)

new file: prime_number.py

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git checkout -- <file>..." to discard changes in working directory)

modified: prime_number.py

Untracked files:

(use "git add <file>..." to include in what will be committed)

test_prime_number.py

1

2

3

4

5

6

7

8

9

10

11

12

13

14 $ git add prime_number.py (1)

$ git add test_prime_number.py (2)

$ git status (3)

On branch master

Changes to be committed:

(use "git reset HEAD <file>..." to unstage)

modified: prime_number.py

new file: test_prime_number.py

$ git commit -m "added new function and test script" (4)

[master cb29a6b] added new function and test script

2 files changed, 7 insertions(+)

create mode 100644 test_prime_number.py

| 1 | We add the patch for the changes in prime_number.py to the staging area. |

| 2 | We add the new file test_prime_number.py to the staging area. |

| 3 | git status now confirms the state of the staging area holding patches related to the two files. |

| 4 | We create a new commit. |

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28 $ git show (1)

commit cb29a6b5974ba6a571e12209e18753d480761849

Author: Uwe <uwe.schmitt@id.ethz.ch>

Date: Sat Jun 2 23:13:33 2018 +0200

added new function and test script

diff --git a/prime_number.py b/prime_number.py (2)

index 75e70ec..4b54780 100644

--- a/prime_number.py

+++ b/prime_number.py

@@ -5,3 +5,6 @@ def is_prime(n):

return False

divisor += 1

return True

+

+def primes_up_to(n):

+ return [i for i in range(2, n + 1) if is_prime(i)]

diff --git a/test_prime_number.py b/test_prime_number.py (3)

new file mode 100644

index 0000000..1cac7da

--- /dev/null

+++ b/test_prime_number.py

@@ -0,0 +1,4 @@

+from prime_number import *

+

+up_to_11 = primes_up_to(11)

+assert up_to_11 == [2, 3, 5, 7, 11], up_to_11

| 1 | git show shows the latest commit. We see that this commit contains two patches. |

| 2 | This is the first patch. |

| 3 | And this is the second patch. |

5.4.1. Summary

These are the commands we used up to now:

-

git addto add new or modified files to the staging area -

git commit -m '…'to create the commit. -

git diffshows current changes relative to the last commit. -

git statusshows the current status of the repository. -

git logshows the history of the repository. -

git showto show the latest commit.

5.5. What’s next ?

5.5.1. Other helpfull commands

-

git log --onelineto show a compressed history with one line per commit. -

git diff --cachedto inspect the staging are -

git show COMMIT_IDto show a commit having idCOMMIT_ID. -

git blame FILEshows each line in the givenFILEwith information from -

git resetto empty the staging area in case you added a file unintendedly. -

git commit --amendto add the current staging area to the latest commit. Usefull if you forgot to add some files in the latest commit. the commit which last modified the line.` -

git checkout FILEresets the namedFILEto the latest version. -

git checkout .resets all files and folders in the current working directory to the latest git version. -

git rmto remove files under version control. -

git mvto rename or move files under version control.

5.5.2. The .gitignore file

git status will also show backup files from your editor or .pyc files or

the __pycache__ folder. To ignore such files and folders you can create a

file named .gitignore, usually in the main folder of the repository. My

repositories usually have a .gitingore file including the following lines:

1

2

3 **/*.pyc (1)

**/.*.sw? (2)

**/__pycache__ (3)

| 1 | Ignores all files ending with .pyc in all subfolders (**). |

| 2 | Ignores all files like .primes.py.swp or .primes.py.swo which are

backup files from vim. |

| 3 | Ignores files and folders named __pycache__ in all subfolders. |

-

Also commit the

.gitignorefile to your repository. -

Add all huge or binary files to

.gitignore.

5.6. How to bring existing source code under version control.

git init . also works is the current folder contains existing code. So to introduce

version control for an existing project follow the following instructions:

-

cdto your projects root folder. -

git init . -

git statusand look for files, folders and name patterns you don’t want to add. -

Create a

.gitignorefile to exclude these. -

git add …for all files or folders you want to bring under version control. -

git resetresets the staging area if you added unwanted files or folders. -

git add .gitignore -

git commit -m "initial commit"

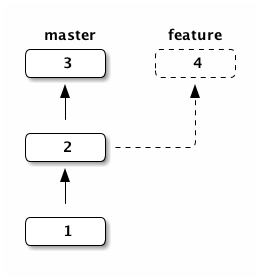

5.7. About branches

Branches support maintaining different versions of source code in the same

git repository. Below you see an example showing two branches master

and feature. In the example below, the master branch is

active, and git log will show you commits 3, 2 and then 1.

The files and folders in your git repository will also correspond

to the commits from this branch.

If you checkout (activate) the branch feature your files will correspond to

the commits from the history 4, 2 and 1:

So branches allow switching of files and folders in your repository to manage different versions of your code.

Branches can also be merged. Here we merged feature into master, this created

a new commit 5 combining the changes introduced in commits 3 and 4:

The master branch is the default branch, which we used all the time, and

which you see in the output of git status from the previous hands-on session.

-

Try to keep the

masterbranch clean and working. -

If you implement a new feature create a new branch for this. When development is complete and your changes are tested you can merge this branch back into

master. This also allows bug-fixes in themasteror a dedicated bug-fix branch when the work onfeatureis not completed. -

Use branches for different versions of your software. So you can fix older bugs even if the development proceeded meanwhile. This helps when users don’t want the most current version (could break their applications), but still need a bug-fix.

-

We see later how to use branches if you want to step back in time to inspect your repository some commits ago.

5.7.1. Branching and Merging Hands-On

The following examples are based on the repository we used during the first hands-on session teaching basic git commands.

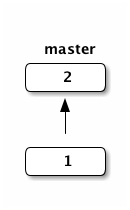

git branch shows available branches, and the active branch is marked with *:

1

2

3

4

5

6 $ git branch (1)

* master

$ git log --oneline (2)

897d002 added new function and test script

3c6205c first version of prime_number.py

| 1 | We have only one branch in the repository up to now. |

| 2 | This is the current history of the master branch. |

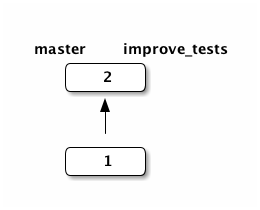

And the following sketch depicts the state of the master repository:

Now we create a new branch:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15 $ git branch improve_tests (1)

$ git branch (2)

improve_tests

* master

$ git checkout improve_tests (3)

Switched to branch 'improve_tests'

$ git branch (4)

* improve_tests

master

$ git log --oneline (5)

897d002 added new function and test script

3c6205c first version of prime_number.py

| 1 | git branch improve_tests creates a new branch with the given name. |

| 2 | We see now both branches, master is still the active branch. |

| 3 | git checkout improve_tests activates the new branch. |

| 4 | We see both branches again, but the active branch changed. |

| 5 | The new branch still has the same history as the master branch. |

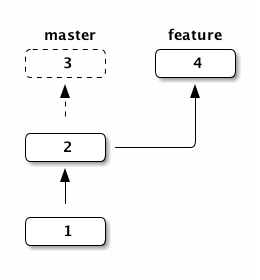

This image reflects the state of our repository: two branches with the same history:

Now we extend the test_prime_numer.py by adding two new lines at the end

of the file:

1

2

3

4

5

6 from prime_number import *

up_to_11 = primes_up_to(11)

assert up_to_11 == [2, 3, 5, 7, 11], up_to_11

assert primes_up_to(0) == []

assert primes_up_to(2) == [2]

Let’s inspect the changes and create a new commit in our new branch:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21 $ git diff

diff --git a/test_prime_number.py b/test_prime_number.py

index aaf9064..b258c6d 100644

--- a/test_prime_number.py

+++ b/test_prime_number.py

@@ -2,3 +2,5 @@ from prime_number import *

up_to_11 = primes_up_to(11)

assert up_to_11 == [2, 3, 5, 7, 11], up_to_11

+assert primes_up_to(0) == [] (1)

+assert primes_up_to(2) == [2]

$ git add test_prime_number.py

$ git commit -m "improved tests"

[improve_tests 2925e4d] improved tests

1 file changed, 2 insertions(+)

$ git log --oneline

2925e4d improved tests

1d864cd added new function and test script

064a280 first version of prime_number.py

| 1 | These are the two new lines. |

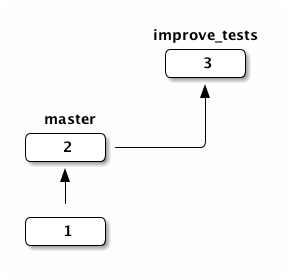

The branch feature_branch now has a third commit and now deviates from the

master branch:

Let’s switch back to master and check test_prime_number.py:

1

2

3

4

5

6

7

8 $ git checkout master (1)

Switched to branch 'master'

$ cat test_prime_number.py (2)

from prime_number import *

up_to_11 = primes_up_to(11)

assert up_to_11 == [2, 3, 5, 7, 11], up_to_11

| 1 | We activate the master branch again. |

| 2 | And we see that the test_prime_number.py script is without the recent

changes we did in the improve_tests branch. |

Next we improve our is_prime function. We handle even values of n first

and then reduce the divisors to odd numbers 3, 5, 7, …:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16 def is_prime(n):

if n == 2:

return True

if n % 2 == 0:

return False

divisor = 3

while divisor * divisor <= n:

if n % divisor == 0:

return False

divisor += 2

return True

def primes_up_to(n):

return [i for i in range(2, n + 1) if is_prime(i)]

We commit these changes:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17 $ git add prime_number.py

$ git commit -m "improved is_prime function"

*** Please tell me who you are.

Run

git config --global user.email "you@example.com"

git config --global user.name "Your Name"

to set your account's default identity.

Omit --global to set the identity only in this repository.

fatal: unable to auto-detect email address (got 'root@f54e1484dc35.(none)')

$ git log --oneline

fatal: your current branch 'master' does not have any commits yet

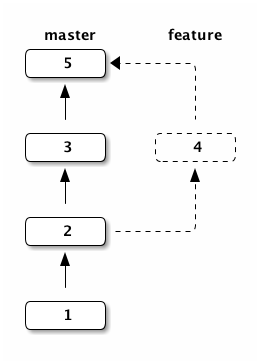

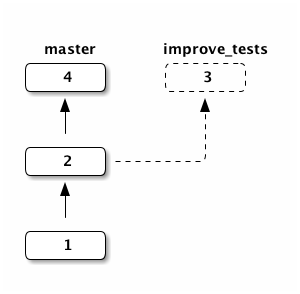

And this is the current state of the repository:

Now we merge the improve_tests branch into master:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17 $ git branch (1)

improve_tests

* master

$ git merge improve_tests (2)

Merge made by the 'recursive' strategy.

test_prime_number.py | 2 ++

1 file changed, 2 insertions(+)

$ git log --oneline --graph (3)

* da3a460 Merge branch 'improve_tests'

|\

| * 55c9744 improved tests

* | cef78db improved is_prime function

|/

* 6f98631 added new function and test script

* a52a49e first version of prime_number.py

| 1 | We make sure that master is the active branch. |

| 2 | We merge improve_tests into the active branch. |

| 3 | This shows the history with extra symbols sketching the commits from merged branches.

We also see, that git merge created a new commit with an automatically generated message. |

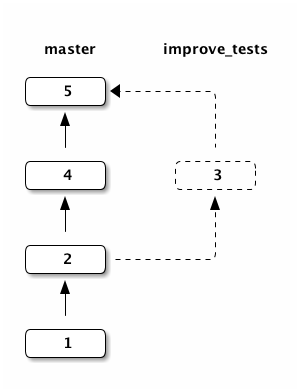

And this is the current state of our repository:

A so called merge conflict arises when we try to merge two branches with overlapping changes.

In this case git merge fails with an corresponding message, git status also

shows a Unmerged paths section with the affected files.

If you open an affected file you will find sections like

<<<<<<< HEAD while i < 11: j += i ======= while i < 12: j += i ** 2 >>>>>>> branch-a

The two sections separated by marker lines <<<<<< HEAD, ======= and

>>>>>> branch-a show the conflicting code lines. branch-a is here the name

of the branch we want to merge, and as such can differ from merge to merge.

The computer is not able to determine the intended merge of the shown sections, instead human intervention is needed. Thus the users has to edit the file and decide how the code should look like after merging. The markers also have to be removed.

We could e.g. rewrite the example fragment as:

while i < 11: j += i ** 2

The full procedure to resolve the merge conflict(s) is as follows:

-

Open a file with an indicated merge conflict in your editor.

-

Find the conflicting sections.

-

Bring the two parts lines between

<<<<<<and>>>>>>to the intended result without the three marker lines. -

If done for all conflicting sections save the file and quit the editor.

-

git add FILE_NAMEfor the affected file. -

Repeat from 1. until you resolved all merge conflicts.

-

git commit(without any message) completes the merge process.

Between the failing git merge and the final git commit your

repository is in an intermediate state. Always complete resolving a merge

conflict, or use git merge abort to cancel the overall merging process.

|

5.8. Remote repositories

Up to now we worked with one local git repository. Beyond that git allows synchronization of different repositories located on the same or remote computers.

Known hosting services such as github.com and gitlab.com manage local git

repositories on their servers and offer a convenient web interface for

additional functionalities supporting software development in a team.

The open-source software gitlab-ce (gitlab community edition) is

the base for the free services at gitlab.com and can be installed

with little effort. This enables teams or organizations to run their

own gitlab instance.

There are different use-cases for remote repositories:

-

You want to share your code. For general availability of your code you can use so called public repositories, for a limited set of collaborators you can use private repositories. Public repositories allow others to implement changes or bug-fixes and offer them as so called merge requests (

gitlab.com) or pull requests (github.com). -

You want work on the same software project in a team.

-

You develop and run code on different machines. A remote repository can be used as an intermediate storage.

-

Last but not least, a remote repository can be used as a backup service.

To reproduce the following examples you need

an account on gitlab.com or github.com. To create an account you have to

provide your email address and your name, after that you should receive an

email containing a link to complete your registration and to set you password.

To setup passwordless connection of the command line git client to your remote repositories, we need a key pair.

Run this in your terminal:

1

2

3

4

5

6 $ test -f ~/.ssh/id_rsa.pub || ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa.pub (1)

$ cat ~/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQCeBGRBsNYSD5RBSDFdC (2)

....

....

+w== uweschmitt (3)

| 1 | You already might have created a key pair (e.g. for passwordless ssh

access to other computers). Here we run ssh-keygen only if no such key pair

exists. |

| 2 | This is where the public key starts |

| 3 | And this is where it ends, we ommitted some lines. |

Copy the public key, then

Choose a name and create a project:

-

gitlab.comusers follow read here until '5. Click Create project.' -

github.comusers read here.

Next we have to determine the URL for ssh access to the repository.

This URL has the format git@gitlab.com:USERNAME/PROJECT_NAME.git or

git@github.com:USERNAME/PROJECT_NAME.git.

On gitlab.com you find the exact URL on the top of the projects page, on

github.com it is shown when you press the Clone or download button.

Using this URL we can now connect our existing git repository to the remote:

1

2

3

4

5

6

7

8

9

10

11

12

13 $ git remote add origin git@gitlab.com:book_eth/book_demo.git (1)

$ git remote -v (2)

origin git@gitlab.com:book_eth/book_demo.git (fetch)

origin git@gitlab.com:book_eth/book_demo.git (push)

$ git push origin --all (3)

remote: